Announcing the Data Quality Toolkit: guarantee quality data from the source

Written by

Eric Dodds

Head of Product Marketing

Bad data will sabotage your efforts to turn customer data into competitive advantage. It creates expensive data wrangling and cleaning work that can span the entire data team and even require effort from business teams. This low-value work can keep you from key initiatives altogether, but choosing not to do it isn’t an option. Fueling your business with bad data only leads to lackluster results and frustrated stakeholders.

If you have a data quality problem, it can feel like a catch-22, but you don’t have to settle for the painful tradeoff. We created a Data Quality Toolkit to help you guarantee quality event data from the source, so your team can do their best work and help your business grow.

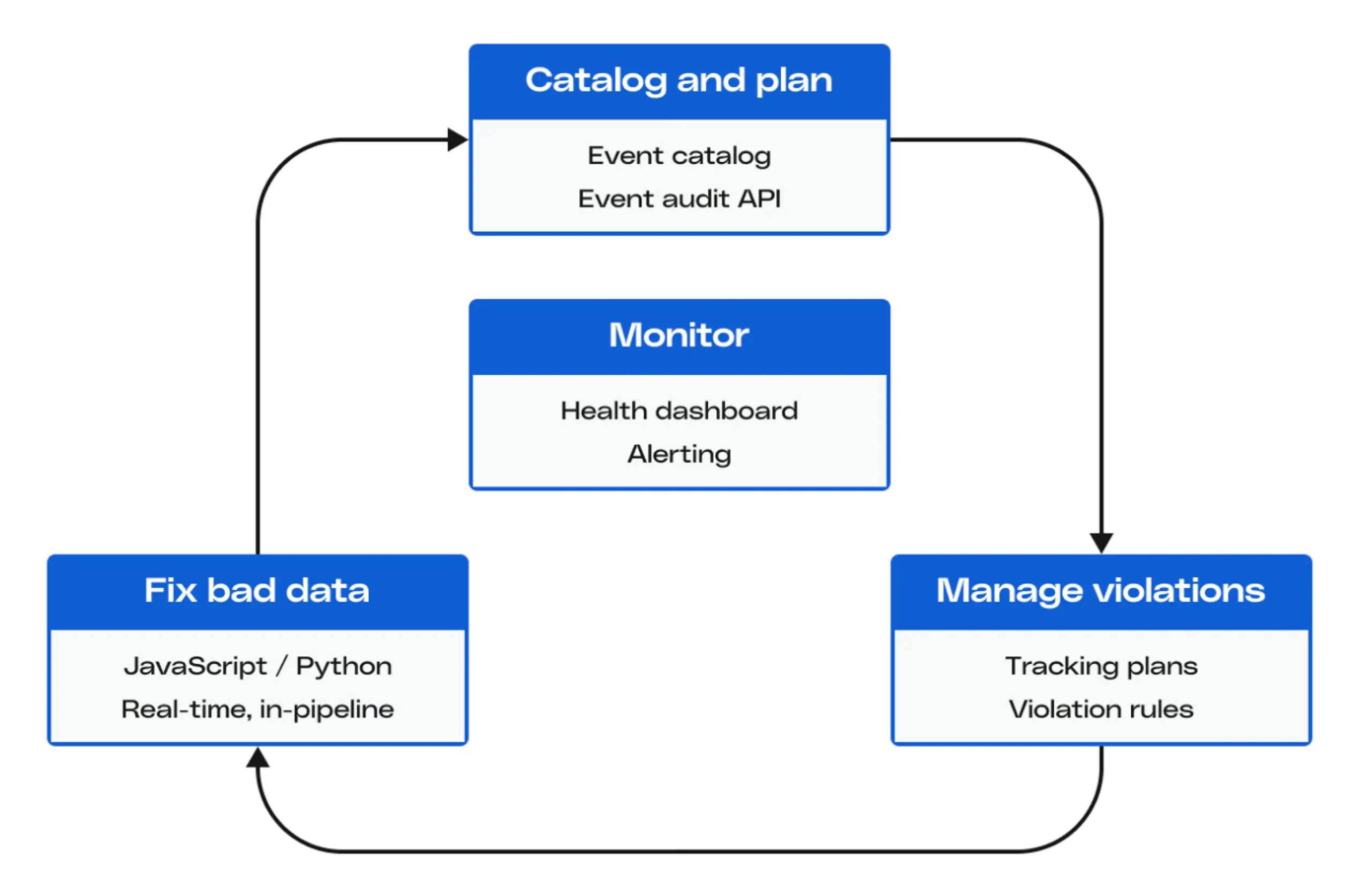

The toolkit is a set of features you can use to proactively manage data quality for your event data. It includes tools for:

- Collaborative event definitions

- Violation management

- Real-time schema fixes

- Centralized monitoring and alerting

I’ll provide an overview below, and next week we’ll release a new data quality feature every day, diving into specific functionality and use cases. We’ll wrap it all up with a webinar detailing each data quality workflow and providing an end-to-end product demo, so stay tuned.

The cost of bad data

Your competitors have access to the same growth levers that you do. Your customer data is one of the few remaining assets at your disposal that can’t be copied or replicated. It’s a secret weapon you can use to build differentiated products and experiences that drive growth and retention. Leading data teams leverage their customer data to deliver high-impact machine learning projects like churn prediction and personalized recommendation systems to create significant competitive advantages for their companies. If you have a data quality problem, though, success like this can seem out of reach.

In truth, these types of projects aren’t rocket science. They require expertise and effort, but the methods and tools needed to implement them are known and accessible. So, what makes success so elusive? The most common culprit is the data itself – garbage in, garbage out.

When your raw material is poor-quality data, you have to expend immense effort turning it into something usable before you can begin work on projects like churn prediction or recommendations. Your constraint, then, isn’t expertise or ability, it’s a lack of clean, workable data.

"We’ve found that four out of every eight hours in a data engineer’s day is spent on data cleanup. This is one of the biggest hidden costs modern companies face."

Kunal Agarwal, CEO of Unravel

If you have poor data quality, you’ll spend so much time just keeping the lights on that you won’t be able to create value. Some experts estimate that 50% of the typical data engineer’s time is wasted on this expensive data wrangling.

Guarantee quality data at the source with RudderStack

If bad data is keeping your team from delivering their best work and creating competitive advantages for your business, it’s best to start addressing the problem at the source. Our Data Quality Toolkit can help you manage the entire lifecycle of customer data quality, starting at collection. I’ll give an overview of each feature below.

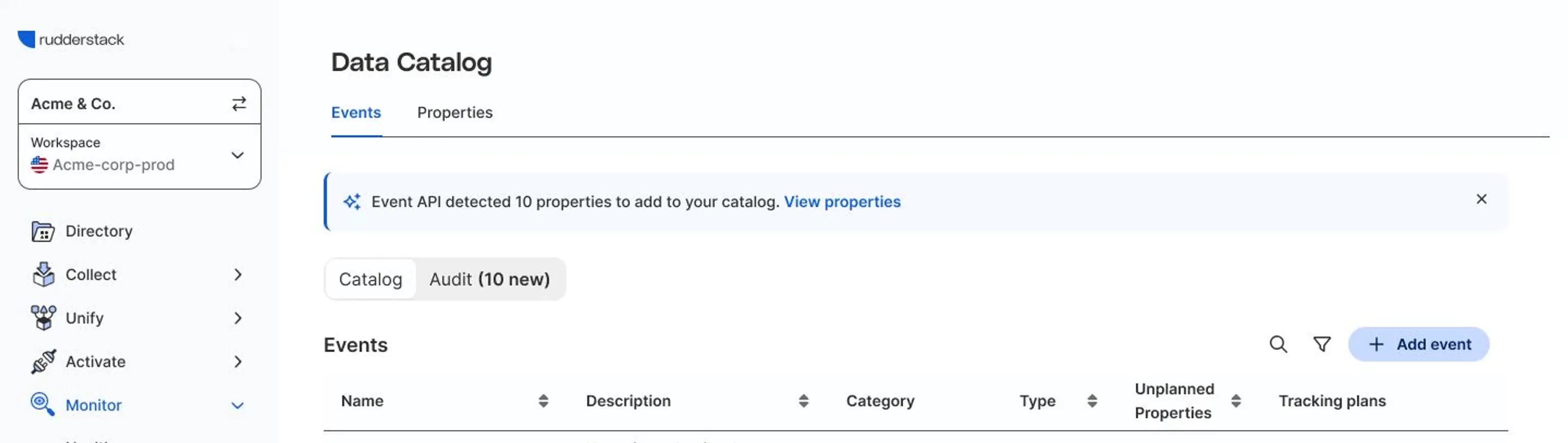

Collaborative event definitions

Gone are the days of out-of-date spreadsheets and documents. Our data catalog makes it easy for you to collaborate with business teams on schema definitions for events and properties. You can use it to align the business on definitions, keep everyone on the same page, and track changes as business requirements evolve.

To support more advanced workflows, our data catalog also allows your team to access and update the catalog through their existing workflows using our Event Audit API.

Data catalog sneak peek

Read the launch blog for a Data Catalog deep dive.

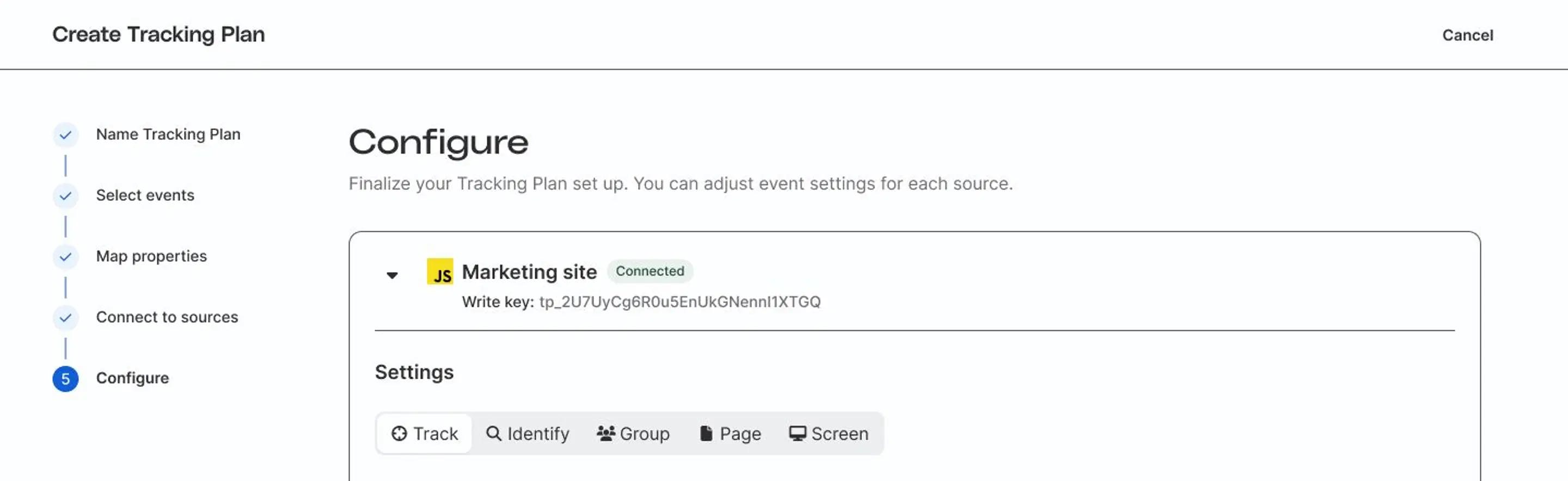

Violation management

Once you establish event schema definitions, you can create Tracking Plans that validate each incoming event, automatically flagging violations at any level of detail (even with nested properties!).

When events violate the tracking plan, you can apply custom rules on a per-source, per-event, or per-property basis to meet any downstream business need, whether that means dropping events completely or propagating error messages in the payload.
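As a rough illustration of the validation idea above, the sketch below checks an event against a plan and then applies a per-event rule. Everything in it is hypothetical and invented for this example: the plan format, the `validate` and `apply_rule` helpers, and the rule names `drop` and `propagate_errors`. It is not RudderStack's actual Tracking Plan format or API.

```python
# Hypothetical tracking plan: event name -> required property paths and types.
# Dotted paths stand in for nested properties.
TRACKING_PLAN = {
    "Order Completed": {
        "order_id": str,
        "total": float,
        "shipping.address.country": str,
    }
}

# Hypothetical per-event rules for handling violations.
VIOLATION_RULES = {"Order Completed": "propagate_errors"}
DEFAULT_RULE = "drop"

_MISSING = object()  # sentinel so a legitimate None value is not treated as absent


def get_path(obj, path):
    """Walk a dotted path through nested dicts; return _MISSING if absent."""
    current = obj
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return _MISSING
        current = current[key]
    return current


def validate(event):
    """Return a list of human-readable violations for one event."""
    name = event.get("event")
    spec = TRACKING_PLAN.get(name)
    if spec is None:
        return [f"unplanned event: {name}"]
    violations = []
    props = event.get("properties", {})
    for path, expected in spec.items():
        value = get_path(props, path)
        if value is _MISSING:
            violations.append(f"missing property: {path}")
        elif not isinstance(value, expected):
            violations.append(
                f"wrong type for {path}: expected {expected.__name__}, "
                f"got {type(value).__name__}"
            )
    return violations


def apply_rule(event):
    """Pass clean events through; drop or annotate events that violate the plan."""
    violations = validate(event)
    if not violations:
        return event
    rule = VIOLATION_RULES.get(event.get("event"), DEFAULT_RULE)
    if rule == "drop":
        return None
    # "propagate_errors": keep the event and attach the violations to its payload.
    flagged = dict(event)
    flagged["context"] = {**event.get("context", {}), "violations": violations}
    return flagged
```

In this sketch, an event that is not in the plan falls back to the default rule and is dropped, while a malformed `Order Completed` event is kept and carries its violations downstream in `context`.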

Tracking plan sneak peek

Read the launch blog for a Tracking Plans deep dive.

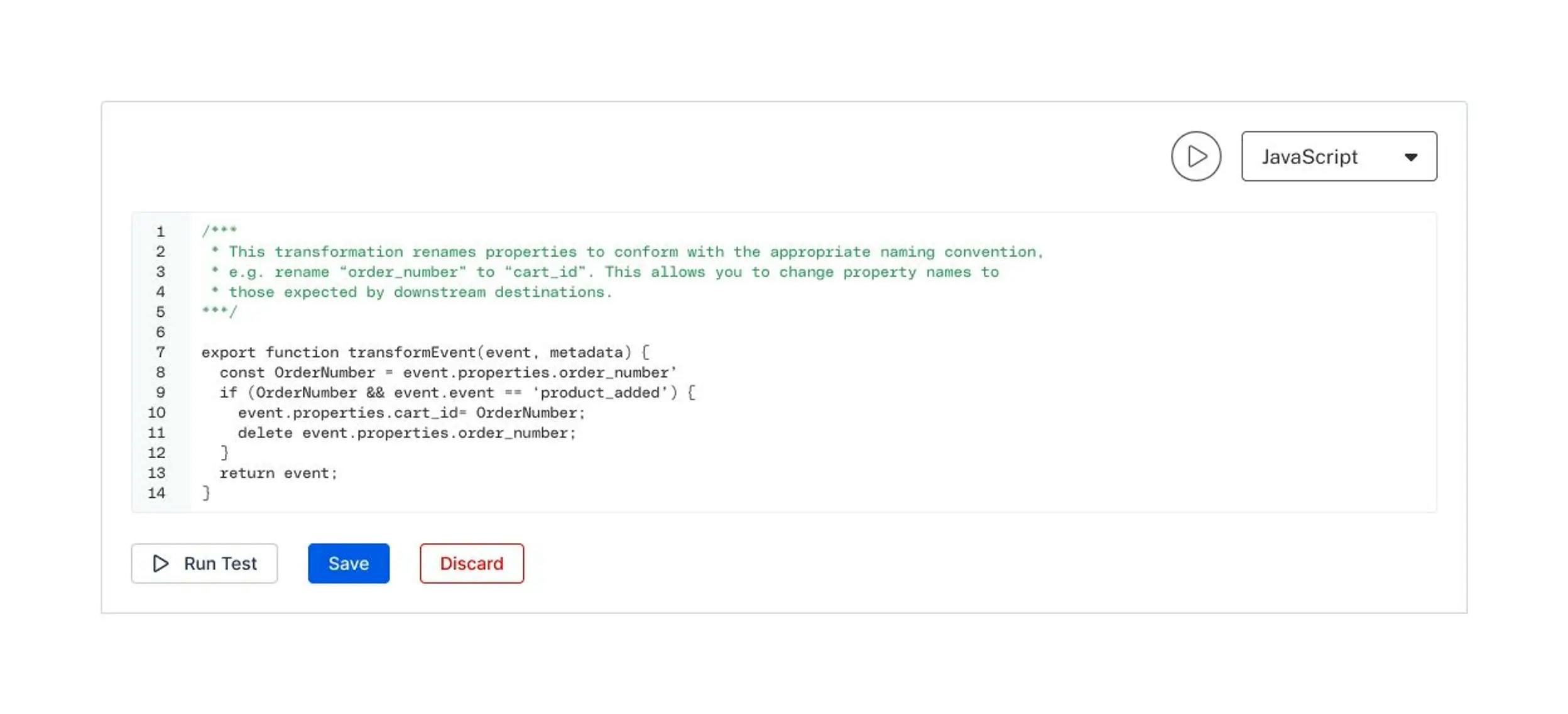

Real-time schema fixes

When you catch violations, our toolkit makes it easy to apply fixes to correct the bad data. Instead of facing weeks of data cleanup in the warehouse, along with dev tickets and sprint cycles to get instrumentation updated in a website or app, you can use Transformations to fix schemas in real time before they reach downstream destinations.
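To make the idea concrete, here is a minimal sketch of a transformation that repairs a schema in flight. It assumes the `transformEvent(event, metadata)` entry point used by RudderStack's Python transformations. The property names (`orderId`, `order_id`, `total`) and the two fixes are hypothetical, chosen only for illustration.

```python
def transformEvent(event, metadata):
    """Repair two hypothetical schema problems before the event goes downstream."""
    props = event.get("properties")
    if not isinstance(props, dict):
        # Nothing to fix on events without a properties object.
        return event

    # Fix 1: the app sends camelCase `orderId`, but the plan expects `order_id`.
    if "orderId" in props and "order_id" not in props:
        props["order_id"] = props.pop("orderId")

    # Fix 2: `total` sometimes arrives as a string such as "19.99"; coerce it.
    total = props.get("total")
    if isinstance(total, str):
        try:
            props["total"] = float(total)
        except ValueError:
            # Leave unparseable values untouched so they still surface as violations.
            pass

    return event
```

The point of a fix like this is that it ships without an app release: the instrumentation can be corrected later on its normal sprint schedule, while downstream destinations already receive the expected shape.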

Real-time schema fixes sneak peek

Read the launch blog for a real-time schema fixes deep dive.

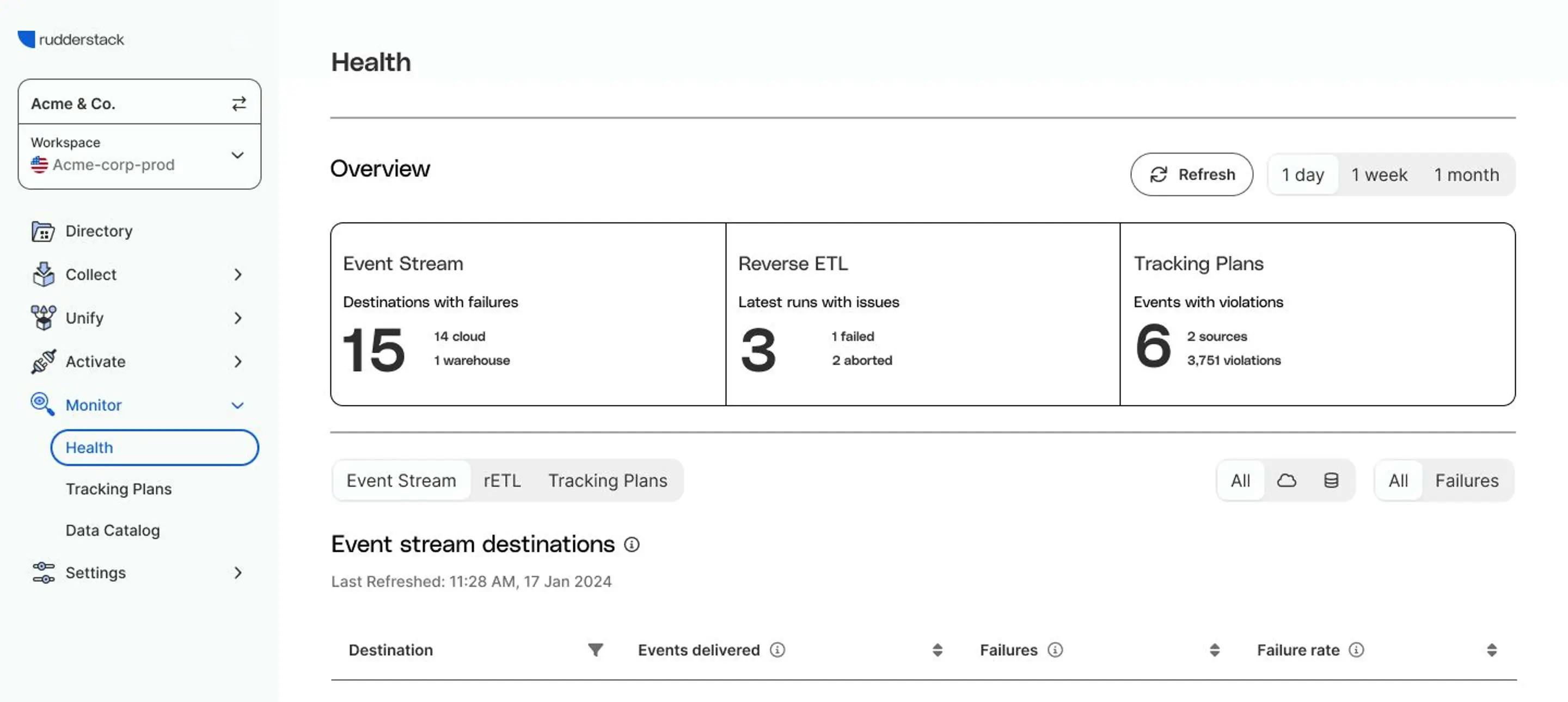

Monitoring and alerting

To empower your team to focus on their most important work, you have to trust the data quality measures you have in place and establish processes to trigger efficient troubleshooting when required. That’s where our health dashboard comes in. It gives you a global view of your entire customer data stack, providing a central place to monitor the health of your data sources and destinations. It also gives you visibility into any tracking plan violations. You can use it to apply custom rules for alerting to any source or destination and route alerts to your tool of choice, whether that’s Slack, PagerDuty, or another tool.
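One way to picture custom alerting rules is as thresholds on the violation rate per source or destination, each routed to a channel. The sketch below is an assumption-laden illustration only: the rule fields (`target`, `max_violation_rate`, `channel`), the `alerts_for` helper, and the stats format are hypothetical and do not describe how the health dashboard is configured.

```python
def alerts_for(stats, rules):
    """Return (channel, message) pairs for every rule whose threshold is exceeded.

    stats: hypothetical mapping of source/destination name -> (violations, total events)
    rules: list of dicts with hypothetical keys target, max_violation_rate, channel
    """
    alerts = []
    for rule in rules:
        violations, total = stats.get(rule["target"], (0, 0))
        if total == 0:
            # No traffic in the window, so there is no rate to evaluate.
            continue
        rate = violations / total
        if rate > rule["max_violation_rate"]:
            message = f"{rule['target']}: {rate:.1%} of events violate the tracking plan"
            alerts.append((rule["channel"], message))
    return alerts


# Example: alert Slack for the web source, PagerDuty for the iOS source.
rules = [
    {"target": "web", "max_violation_rate": 0.02, "channel": "slack"},
    {"target": "ios", "max_violation_rate": 0.02, "channel": "pagerduty"},
]
stats = {"web": (30, 1000), "ios": (1, 1000)}
pending = alerts_for(stats, rules)
```

Here only the web source crosses its 2% threshold, so `pending` holds a single Slack alert. Delivering the message to Slack or PagerDuty is left out of the sketch.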

Health dashboard sneak peek

Read the launch blog for a Health Dashboard deep dive.

Stay tuned for deep dives and demos

Data quality issues shouldn’t compromise your ability to turn customer data into competitive advantage. If your team is struggling to do their best work because they’re busy dealing with bad data, taking action to address the issue at the source can put you on the fast track to success.

Using our Data Quality Toolkit will make it easier for you to deliver powerful projects like churn prediction and personalization systems that will create leverage for your business. If you want to see these features today or have them enabled on your account, request a demo with our team. For more details on each feature, sign up for our webinar and follow us on LinkedIn to get notified as we post deep dives next week.
